Text-Guided 3D Texture Generation

Generating photorealistic textures for 3D objects from text prompts

🎬 Project Overview

This project explores how to generate high-quality, photorealistic textures for 3D objects using natural language prompts and diffusion models.

❌ Limitations of Baseline (TEXTure)

The baseline model, TEXTure, struggles with viewpoint inconsistency: textures generated from different views do not align well, which produces visual artifacts and an unrealistic appearance in 3D space.

🔧 Our Approach

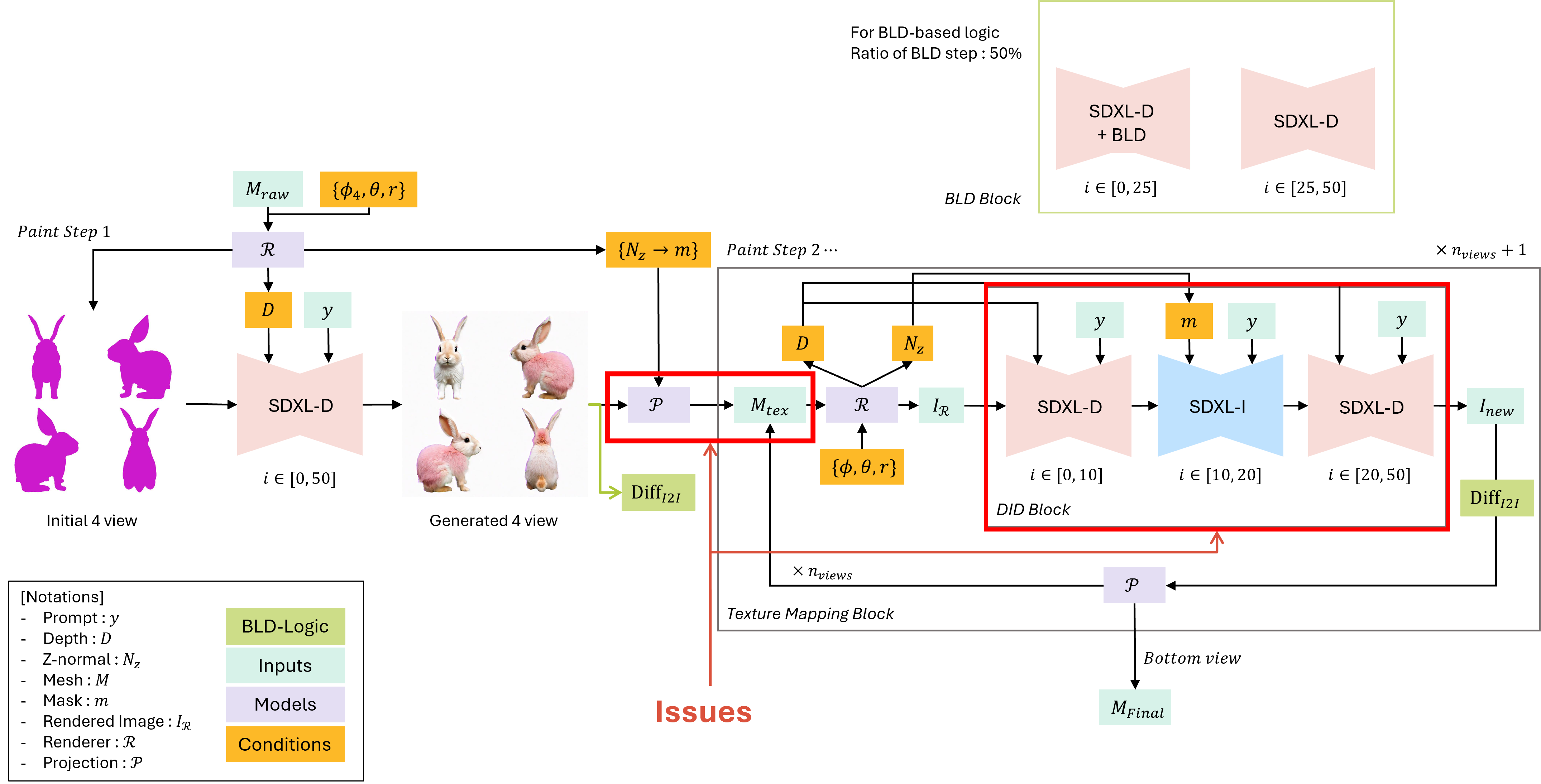

To overcome the limitations of TEXTure, our method introduces the following improvements:

- A multi-view 2×2 grid is applied during both training and inference to enforce texture consistency across views.

- Stable Diffusion 1.5 → SDXL: We replace the backbone with Stable Diffusion XL, which improves texture fidelity and detail.

- A delighting module is integrated to remove lighting inconsistencies across views, yielding more coherent and photorealistic results.
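
As a rough illustration of the 2×2 grid idea, the sketch below tiles four rendered views into a single image and splits that image back into per-view images. It is not the project's actual code. It assumes the four views are equally sized `(H, W, C)` arrays arranged in row-major order; the function names `make_view_grid` and `split_view_grid` are hypothetical.

```python
import numpy as np


def make_view_grid(views):
    """Tile four (H, W, C) views into one (2H, 2W, C) image, row-major.

    Assumed layout (not confirmed by the project):
        view 0 | view 1
        view 2 | view 3
    """
    if len(views) != 4:
        raise ValueError("expected exactly 4 views")
    views = [np.asarray(v) for v in views]
    if any(v.shape != views[0].shape for v in views):
        raise ValueError("all views must share the same shape")
    top = np.concatenate(views[:2], axis=1)     # join along width
    bottom = np.concatenate(views[2:], axis=1)  # join along width
    return np.concatenate([top, bottom], axis=0)  # stack rows along height


def split_view_grid(grid):
    """Split a (2H, 2W, C) grid back into four (H, W, C) views."""
    grid = np.asarray(grid)
    if grid.shape[0] % 2 or grid.shape[1] % 2:
        raise ValueError("grid height and width must be even")
    h, w = grid.shape[0] // 2, grid.shape[1] // 2
    return [grid[:h, :w], grid[:h, w:], grid[h:, :w], grid[h:, w:]]
```

Because all four views sit in one image, a single diffusion pass can see them together, which is presumably what encourages cross-view consistency. After generation, `split_view_grid` recovers the individual views so each one can be projected back onto the mesh.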