Digital images and videos are inevitably degraded on the way from content generation to consumption. Acquisition, transmission, scaling, format conversion, and compression each introduce distortions such as white noise, Gaussian blur, blocking artifacts, and banding artifacts. Multiple distortions often occur together and interact, which makes the problem considerably harder. Since human observers are the ultimate receivers of digital images and videos, quality metrics should be designed from a human-oriented perspective. The PI was among the first to apply deep neural networks to image quality assessment (Kim et al., 2017), (Kim & Lee, 2017), (Kim et al., 2018), (Kim et al., 2019), (Kim et al., 2014), (Oh et al., 2017).

Concept of classical image quality assessment. Even when different distortions yield the same mean squared error, the perceived image quality can differ significantly among them.
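The point about equal mean squared error can be illustrated with a small sketch. The image size, the error level `C`, and the three toy distortions below are hypothetical choices made only for this illustration; they are not taken from the cited papers.

```python
import math

def mse(ref, dist):
    """Mean squared error between two equally sized grayscale images (lists of rows)."""
    n = sum(len(row) for row in ref)
    return sum((r - d) ** 2 for rr, dr in zip(ref, dist) for r, d in zip(rr, dr)) / n

def max_abs_error(ref, dist):
    """Largest per-pixel deviation, a crude indicator of how concentrated the error is."""
    return max(abs(r - d) for rr, dr in zip(ref, dist) for r, d in zip(rr, dr))

SIZE = 8    # hypothetical 8x8 test image
C = 10.0    # root-mean-squared error shared by all three distortions

# Flat mid-gray reference, so none of the distortions leaves the [0, 255] range.
ref = [[128.0] * SIZE for _ in range(SIZE)]

# Distortion A: uniform brightness shift of +C on every pixel.
shifted = [[p + C for p in row] for row in ref]

# Distortion B: checkerboard of +C / -C, a high-frequency, noise-like pattern.
checker = [[p + (C if (x + y) % 2 == 0 else -C) for x, p in enumerate(row)]
           for y, row in enumerate(ref)]

# Distortion C: the same error energy packed into a single 2x2 block.
block_pixels = 4
amp = C * math.sqrt(SIZE * SIZE / block_pixels)
local = [[p + (amp if x < 2 and y < 2 else 0.0) for x, p in enumerate(row)]
         for y, row in enumerate(ref)]

for name, img in [("shift", shifted), ("checkerboard", checker), ("local block", local)]:
    print(name, "MSE:", mse(ref, img), "max |error|:", max_abs_error(ref, img))
```

All three images have an MSE of `C ** 2`, yet the error is spread uniformly in the first, alternates in sign in the second, and is concentrated in one corner in the third. A pixel-wise metric cannot tell these cases apart, while a viewer plausibly would.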

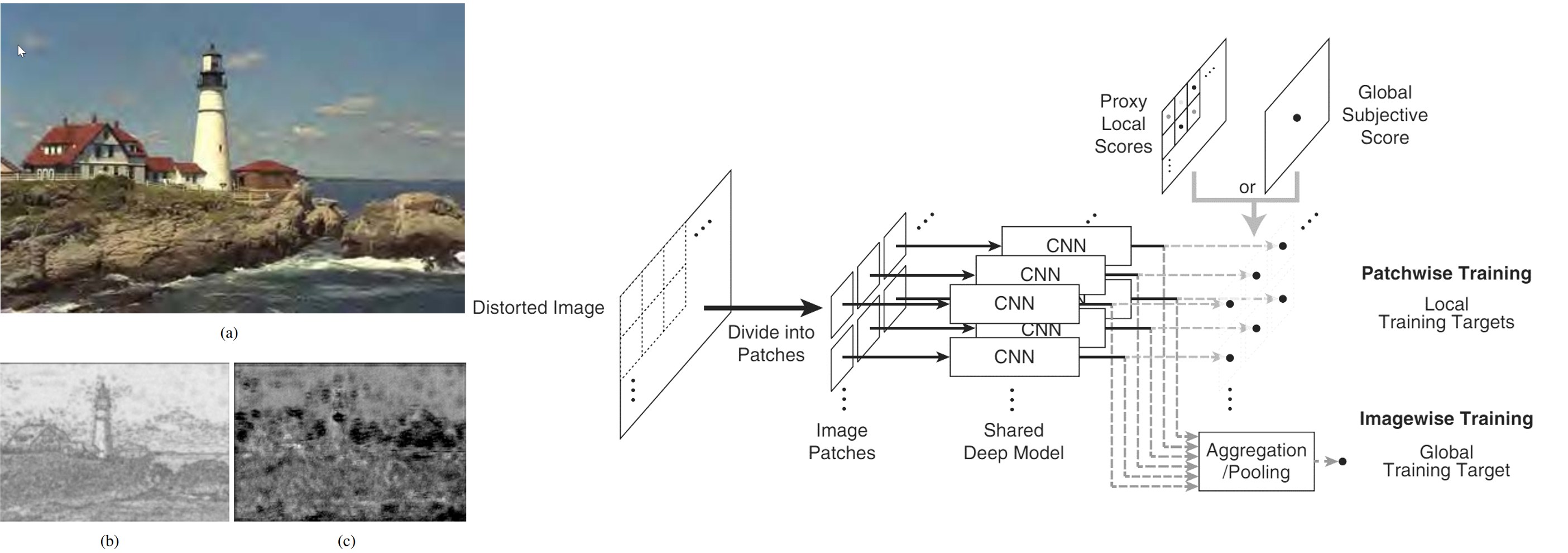

Deep image quality assessment by employing pseudo ground-truth data.
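As a rough sketch of what pseudo ground-truth data can look like, the snippet below derives one label per patch from a reference image and its distorted version. Per-patch MSE is used here only as a stand-in for a full-reference metric; the function names, the patch size, and the choice of metric are assumptions for illustration and not the method of the cited papers.

```python
def patch_mse(ref, dist, top, left, size):
    """MSE over one square patch whose top-left corner is (top, left)."""
    total = 0.0
    for y in range(top, top + size):
        for x in range(left, left + size):
            total += (ref[y][x] - dist[y][x]) ** 2
    return total / (size * size)

def pseudo_quality_map(ref, dist, patch=4):
    """One pseudo label per non-overlapping patch (hypothetical helper).

    The labels need the reference image, but they are only used as training
    targets; a blind model would see the distorted patches alone.
    """
    h, w = len(ref), len(ref[0])
    return [[patch_mse(ref, dist, top, left, patch)
             for left in range(0, w - patch + 1, patch)]
            for top in range(0, h - patch + 1, patch)]

# Toy 8x8 example: only the top-left 4x4 patch is distorted (+5 on every pixel).
ref = [[100.0] * 8 for _ in range(8)]
dist = [[p + (5.0 if x < 4 and y < 4 else 0.0) for x, p in enumerate(row)]
        for y, row in enumerate(ref)]

labels = pseudo_quality_map(ref, dist, patch=4)
print(labels)
```

Each entry of `labels` could then be paired with the corresponding distorted patch as a training target, which sidesteps the need for a human score per patch.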

References

2019

-

Deep CNN-Based Blind Image Quality Predictor

Jongyoo Kim, Anh-Duc Nguyen, and Sanghoon Lee

IEEE Transactions on Neural Networks and Learning Systems, Jan 2019

Image recognition based on convolutional neural networks (CNNs) has recently been shown to deliver the state-of-the-art performance in various areas of computer vision and image processing. Nevertheless, applying a deep CNN to no-reference image quality assessment (NR-IQA) remains a challenging task due to critical obstacles, i.e., the lack of a training database. In this paper, we propose a CNN-based NR-IQA framework that can effectively solve this problem. The proposed method—deep image quality assessor (DIQA)—separates the training of NR-IQA into two stages: 1) an objective distortion part and 2) a human visual system-related part. In the first stage, the CNN learns to predict the objective error map, and then the model learns to predict subjective score in the second stage. To complement the inaccuracy of the objective error map prediction on the homogeneous region, we also propose a reliability map. Two simple handcrafted features were additionally employed to further enhance the accuracy. In addition, we propose a way to visualize perceptual error maps to analyze what was learned by the deep CNN model. In the experiments, the DIQA yielded the state-of-the-art accuracy on the various databases.
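The first training stage described in this abstract can be sketched roughly as follows. The power-law error map, the sigmoid-shaped reliability weighting, and the parameters `p` and `alpha` are assumed forms chosen for illustration; the abstract itself does not give the formulas, so this should not be read as the paper's exact definition. Pixel values are assumed to be normalized to roughly zero mean, so that flat regions sit near zero.

```python
import math

def error_map(ref, dist, p=0.2):
    """Objective error map: per-pixel absolute difference raised to an assumed exponent p."""
    return [[abs(r - d) ** p for r, d in zip(rr, dr)] for rr, dr in zip(ref, dist)]

def reliability_map(dist, alpha=1.0):
    """Assumed weighting: near 0 where the normalized image is flat, approaching 1 elsewhere."""
    return [[2.0 / (1.0 + math.exp(-alpha * abs(d))) - 1.0 for d in row] for row in dist]

def weighted_loss(pred, target, weight):
    """Mean squared error between prediction and target, down-weighted in unreliable regions."""
    n = sum(len(row) for row in pred)
    return sum(((p - t) * w) ** 2
               for pr, tr, wr in zip(pred, target, weight)
               for p, t, w in zip(pr, tr, wr)) / n

# Toy 2x2 example with zero-mean normalized values (hypothetical data).
ref = [[0.0, 0.5], [-0.5, 0.2]]
dist = [[0.0, 0.3], [-0.1, 0.2]]

target = error_map(ref, dist)          # what the CNN would regress in stage one
weight = reliability_map(dist)         # zero weight at the flat pixel (value 0.0)
pred = [[0.1, 0.7], [0.8, 0.0]]        # stand-in for a network output
print(weighted_loss(pred, target, weight))
```

In this sketch a prediction error at the flat pixel contributes nothing to the loss, which mirrors the stated motivation for the reliability map: error-map predictions in homogeneous regions are treated as less trustworthy.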

2018

-

Deep Blind Image Quality Assessment by Learning Sensitivity Map

Jongyoo Kim, Woojae Kim, and Sanghoon Lee

In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Jan 2018

-

Deep Video Quality Assessor: From Spatio-Temporal Visual Sensitivity to a Convolutional Neural Aggregation Network

Woojae Kim, Jongyoo Kim, Sewoong Ahn, and 2 more authors

In European Conference on Computer Vision, Jan 2018

-

Multiple Level Feature-Based Universal Blind Image Quality Assessment Model

Jongyoo Kim, Anh-Duc Nguyen, Sewoong Ahn, and 2 more authors

In IEEE International Conference on Image Processing (ICIP), Jan 2018

2017

-

Deep Convolutional Neural Models for Picture-Quality Prediction: Challenges and Solutions to Data-Driven Image Quality Assessment

Jongyoo Kim, Hui Zeng, Deepti Ghadiyaram, and 3 more authors

IEEE Signal Processing Magazine, Nov 2017

Convolutional neural networks (CNNs) have been shown to deliver standout performance on a wide variety of visual information processing applications. However, this rapidly developing technology has only recently been applied with systematic energy to the problem of picture-quality prediction, primarily because of limitations imposed by a lack of adequate ground-truth human subjective data. This situation has begun to change with the development of promising data-gathering methods that are driving new approaches to deep-learning-based perceptual picture-quality prediction. Here, we assay progress in this rapidly evolving field, focusing, in particular, on new ways to collect large quantities of ground-truth data and on recent CNN-based picture-quality prediction models that deliver excellent results in a large, real-world, picture-quality database.

-

Deep Blind Image Quality Assessment by Employing FR-IQA

Jongyoo Kim and Sanghoon Lee

In IEEE International Conference on Image Processing (ICIP), Nov 2017

-

Deep Learning of Human Visual Sensitivity in Image Quality Assessment Framework

Jongyoo Kim and Sanghoon Lee

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nov 2017

-

Fully Deep Blind Image Quality Predictor

Jongyoo Kim and Sanghoon Lee

IEEE Journal of Selected Topics in Signal Processing, Feb 2017

In general, owing to the benefits obtained from original information, full-reference image quality assessment (FR-IQA) achieves relatively higher prediction accuracy than no-reference image quality assessment (NR-IQA). By fully utilizing reference images, conventional FR-IQA methods have been investigated to produce objective scores that are close to subjective scores. In contrast, NR-IQA does not consider reference images; thus, its performance is inferior to that of FR-IQA. To alleviate this accuracy discrepancy between FR-IQA and NR-IQA methods, we propose a blind image evaluator based on a convolutional neural network (BIECON). To imitate FR-IQA behavior, we adopt the strong representation power of a deep convolutional neural network to generate a local quality map, similar to FR-IQA. To obtain the best results from the deep neural network, replacing hand-crafted features with automatically learned features is necessary. To apply the deep model to the NR-IQA framework, three critical problems must be resolved: 1) lack of training data; 2) absence of local ground truth targets; and 3) different purposes of feature learning. BIECON follows the FR-IQA behavior using the local quality maps as intermediate targets for conventional neural networks, which leads to NR-IQA prediction accuracy that is comparable with that of state-of-the-art FR-IQA methods.

-

Quality Assessment of Perceptual Crosstalk on Two-View Auto-Stereoscopic Displays

Jongyoo Kim, Taewan Kim, Sanghoon Lee, and 1 more author

IEEE Transactions on Image Processing, Feb 2017

-

Blind Deep S3D Image Quality Evaluation via Local to Global Feature Aggregation

Heeseok Oh, Sewoong Ahn, Jongyoo Kim, and 1 more author

IEEE Transactions on Image Processing, Feb 2017

2014

-

Quality Assessment of Perceptual Crosstalk in Autostereoscopic Display

Jongyoo Kim, Taewan Kim, and Sanghoon Lee

In IEEE International Conference on Image Processing (ICIP), Feb 2014