Multimedia Generation & Editing

Computer vision algorithms using generative models

Research on generative models has attracted significant attention following the remarkable success of models such as ChatGPT and image generation services. Recently, the PI has been developing diffusion-model-based text-to-image inpainting algorithms for Microsoft Designer. The PI also has substantial experience with traditional generative models, such as variational autoencoders (VAEs), generative adversarial networks (GANs), and their variants, gained through projects on face and human image synthesis. We will study generation across various modalities (images, 3D, motion, and so on) and its applications.

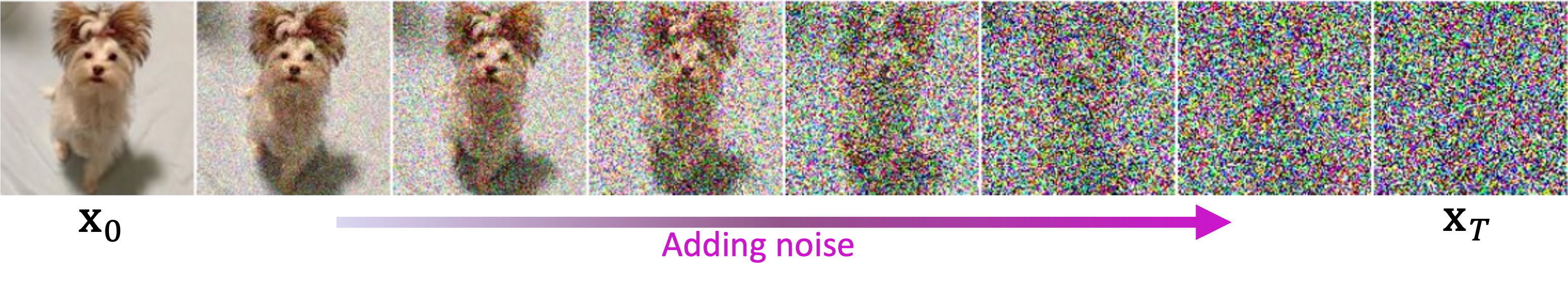

Diffusion process for image generation. Diffusion models apply multiple denoising steps to generate high-fidelity images.
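To make the idea of repeated denoising steps concrete, here is a minimal sketch of a DDPM-style reverse (sampling) loop in plain Python. It is an illustration under stated assumptions, not the method used in the projects described here: the linear noise schedule, the function names `make_schedule` and `sample`, and the `zero_noise_predictor` placeholder (which stands in for a trained noise-prediction network) are all hypothetical.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumption): returns betas and cumulative products of (1 - beta)."""
    if num_steps == 1:
        betas = [beta_start]
    else:
        step = (beta_end - beta_start) / (num_steps - 1)
        betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def zero_noise_predictor(x, t):
    """Hypothetical stand-in for a trained network; it always predicts zero noise."""
    return [0.0] * len(x)


def sample(num_pixels, num_steps=50, predictor=zero_noise_predictor, seed=0):
    """Start from Gaussian noise and apply num_steps denoising updates."""
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(num_steps)
    # x_T: a flattened "image" of pure Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in range(num_pixels)]
    for t in reversed(range(num_steps)):
        eps = predictor(x, t)  # predicted noise at step t
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        scale = 1.0 / math.sqrt(1.0 - betas[t])
        # No fresh noise is added on the final step (t == 0).
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0
        x = [scale * (xi - coef * ei) + sigma * rng.gauss(0.0, 1.0)
             for xi, ei in zip(x, eps)]
    return x


if __name__ == "__main__":
    image = sample(num_pixels=16, num_steps=10)
    print(len(image))
```

With the placeholder predictor the output is still noise; a real system would replace it with a trained network, and the loop structure is what produces the step-by-step refinement shown in the figure.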

Overview of identity-preserving inpainting.

Example results of identity-preserving inpainting.

Diffusion-based image semantic analysis.