Diffusion-/Flow-based Generation

Modeling data distributions via diffusion processes and flow matching for efficient generative learning.

🎬 Project Overview

This project explores generative models based on diffusion processes and flow-based learning, aiming to efficiently model complex data distributions for high-quality image and video generation.

🧠 Research Focus

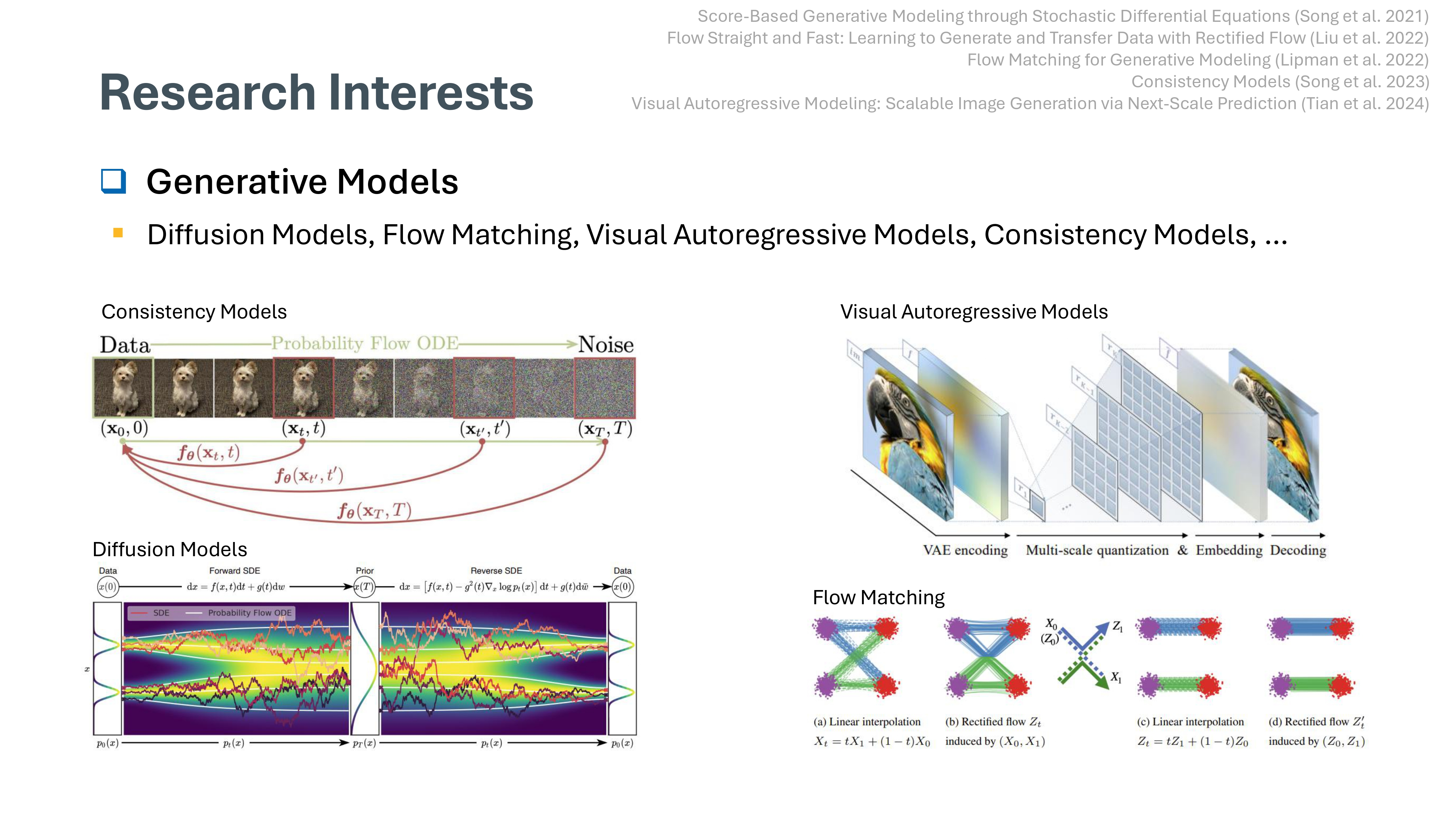

We investigate various generative paradigms, including:

- Diffusion Models

- Flow Matching

- Visual Autoregressive Models

- Consistency Models

Each of these offers a different framework for learning the probabilistic structure underlying visual data.

The figure above summarizes key architectures and landmark papers that form the foundation of each approach.
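
To make the difference between the first two paradigms concrete, here is a minimal NumPy sketch of how each one constructs a training example. It assumes a DDPM-style linear noise schedule for diffusion and the straight-line (rectified-flow) interpolation path for flow matching. The function names and schedule values are illustrative choices, not this project's code, and no network is trained.

```python
import numpy as np

def linear_alpha_bar(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule (DDPM-style, assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def diffusion_pair(x0: np.ndarray, t: int, alpha_bar: np.ndarray, rng: np.random.Generator):
    """Sample x_t ~ q(x_t | x_0); a denoiser would be trained to predict eps from (x_t, t)."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

def flow_matching_pair(data: np.ndarray, t: float, rng: np.random.Generator):
    """Point on the straight path from noise (t=0) to data (t=1); the target is its constant velocity."""
    noise = rng.standard_normal(data.shape)
    x_t = (1.0 - t) * noise + t * data
    velocity = data - noise
    return x_t, velocity

rng = np.random.default_rng(0)
data = rng.standard_normal((4, 8))  # stand-in for a batch of 4 flattened images
alpha_bar = linear_alpha_bar(1000)
x_t, eps = diffusion_pair(data, t=500, alpha_bar=alpha_bar, rng=rng)
x_t_fm, v_target = flow_matching_pair(data, t=0.3, rng=rng)
```

In both cases a network would be fit by regressing its output onto the returned target (`eps` or `v_target`) with a mean-squared error; the two paradigms differ mainly in the path from noise to data and in what the network predicts.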

🧪 Research Contributions

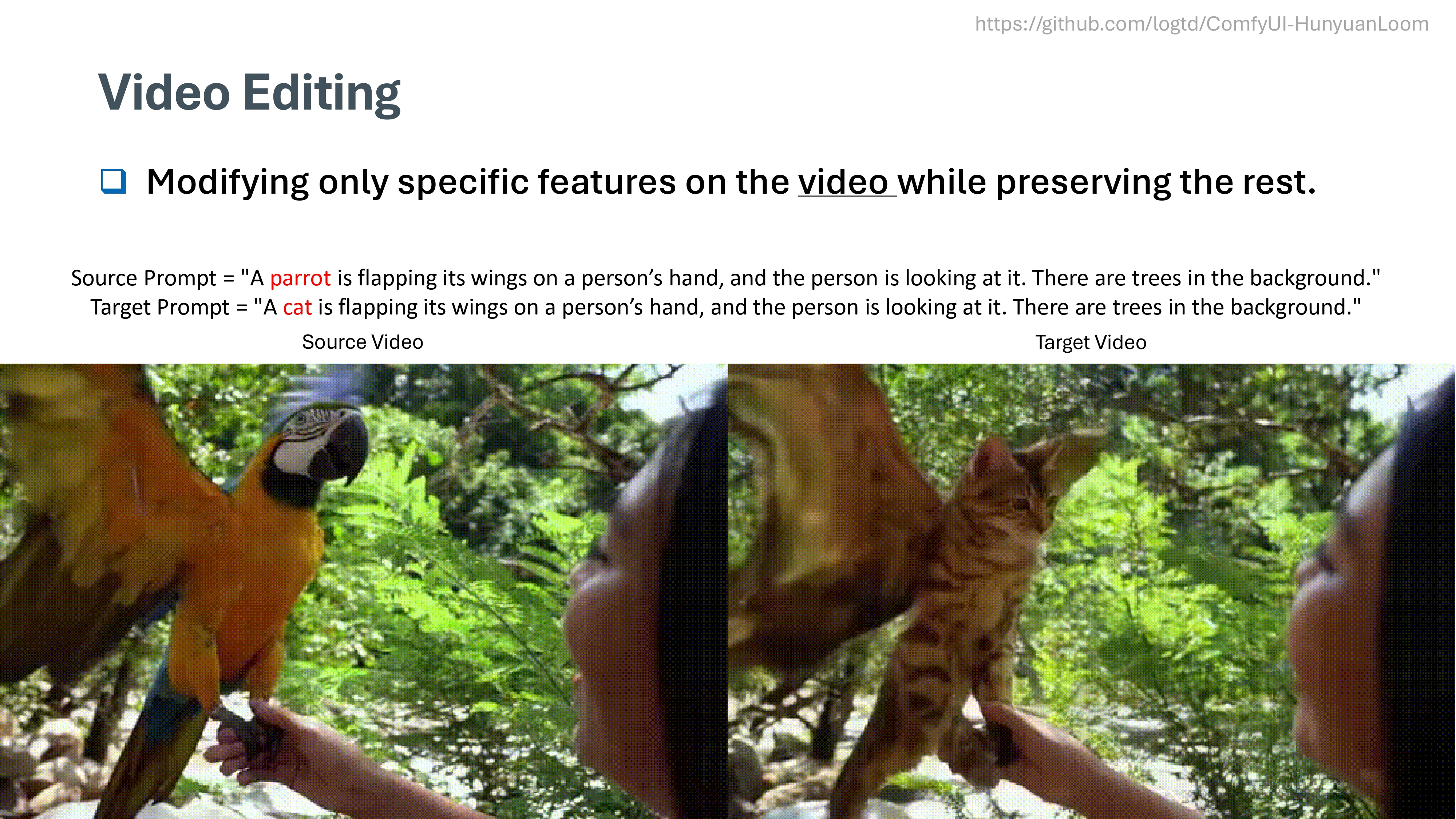

We develop controllable generative techniques for editing content across both images and videos, with a strong emphasis on localized, prompt-driven editing.
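
One common way to keep a prompt-driven edit localized is mask blending: at each denoising step, the latent inside a user-supplied mask comes from the edited (prompt-conditioned) generation, while the latent outside the mask is replaced by the source latent noised to the same level. The sketch below assumes this blended-diffusion-style scheme for illustration only; it is not necessarily the method used in this project, and `noise_to_level`, `blend_latents`, and the value `alpha_bar_t=0.5` are hypothetical.

```python
import numpy as np

def noise_to_level(x0: np.ndarray, alpha_bar_t: float, rng: np.random.Generator) -> np.ndarray:
    """Noise a clean source latent to the level of step t: sqrt(a) * x0 + sqrt(1 - a) * eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps

def blend_latents(edited_t: np.ndarray, source_t: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep the edited latent where mask == 1 and the source latent where mask == 0."""
    mask = np.clip(mask, 0.0, 1.0)
    return mask * edited_t + (1.0 - mask) * source_t

# Toy usage: a 4x4 single-channel "latent" whose left half is editable.
rng = np.random.default_rng(0)
source_latent = rng.standard_normal((4, 4))
edited_latent_t = rng.standard_normal((4, 4))  # stand-in for the prompt-conditioned latent at step t
mask = np.zeros((4, 4))
mask[:, :2] = 1.0
source_latent_t = noise_to_level(source_latent, alpha_bar_t=0.5, rng=rng)
blended = blend_latents(edited_latent_t, source_latent_t, mask)
```

Repeating this blend at every step lets the prompt change only the masked region, while the unmasked region stays tied to the source.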

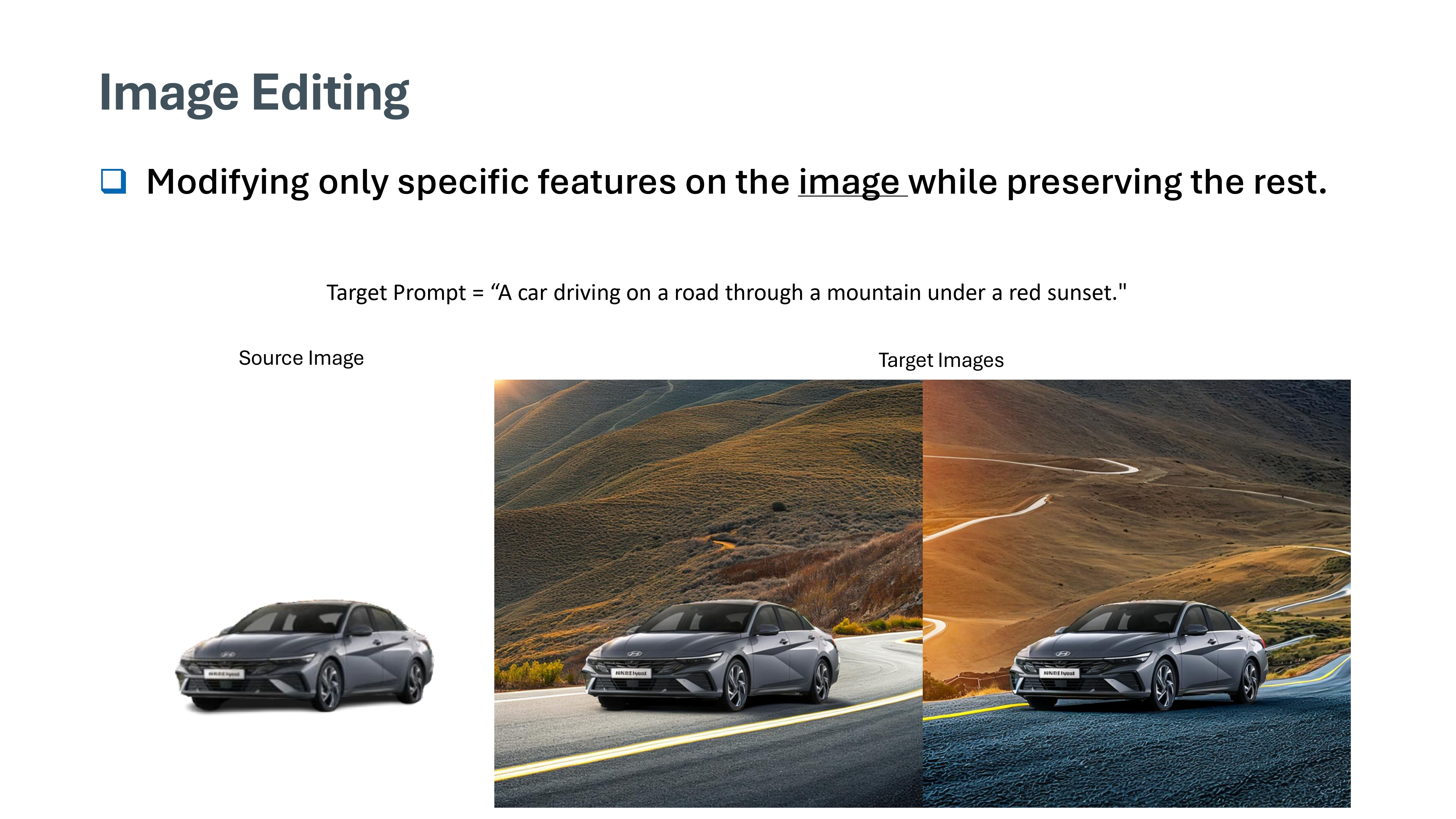

✨ Image Editing

Text-guided diffusion enables fine-grained changes (e.g., modifying the weather or style of a scene) while preserving the main object’s identity.

🎞️ Video Editing

Temporal consistency is maintained across frames, enabling semantically meaningful changes (e.g., replacing a moving object) while preserving motion and background integrity.

Our work builds on recent techniques such as latent diffusion, cross-attention control, and zero-shot video editing frameworks.
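
Cross-attention control can be illustrated with a minimal NumPy sketch of attention-map injection for a word-swap edit (the idea popularized by Prompt-to-Prompt): the attention map computed for the source prompt is reused when denoising with the edited prompt, so the spatial layout stays fixed while the swapped token's values change the content. The single-head formulation, the shapes, and the random stand-in features are assumptions; real pipelines apply this inside the attention layers of a U-Net or transformer, and only for part of the denoising schedule.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, text_keys, text_values, injected_probs=None):
    """Single-head cross-attention from image positions to prompt tokens.

    queries: (n_pixels, d); text_keys / text_values: (n_tokens, d).
    If injected_probs (n_pixels, n_tokens) is given, it is used instead of a freshly
    computed attention map, so the edited prompt reuses the source prompt's layout.
    """
    if injected_probs is None:
        d = queries.shape[-1]
        probs = softmax(queries @ text_keys.T / np.sqrt(d))
    else:
        probs = injected_probs
    return probs @ text_values, probs

rng = np.random.default_rng(0)
n_pixels, n_tokens, d = 16, 5, 8
queries = rng.standard_normal((n_pixels, d))  # image features at one denoising step
k_src = rng.standard_normal((n_tokens, d))    # source-prompt token keys
v_src = rng.standard_normal((n_tokens, d))    # source-prompt token values
k_edit, v_edit = k_src.copy(), v_src.copy()
k_edit[2] = rng.standard_normal(d)  # token 2 is the swapped word (e.g. "cat" -> "dog")
v_edit[2] = rng.standard_normal(d)

out_src, probs_src = cross_attention(queries, k_src, v_src)
out_edit, _ = cross_attention(queries, k_edit, v_edit, injected_probs=probs_src)
```

Because `out_edit - out_src` equals `probs_src @ (v_edit - v_src)`, only the swapped token's row contributes, so the change is concentrated on the image positions that attended to that word in the source prompt.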